Code Sample/Portfolio

By Kexing Yu

This page presents two personal projects, MadParticle and NotEnoughBandwidth. Both are Minecraft Java Edition mods and both are still under active development. I am the lead creator of these projects, responsible for planning, design, programming, publication, and maintenance.

MadParticle

MadParticle was originally designed as a decorative particle mod for Minecraft, allowing players to create visually rich in-game scenes through a large number of configurable parameters.

Unlike the particle systems in Unreal or Unity, Minecraft's particles are relatively heavyweight: they behave more like client-side actors/entities than like VFX. MadParticle therefore also provides a highly optimized particle system that replaces vanilla particle rendering and computation, delivering a 15–20x performance improvement.

The complete source code of the project is available at https://github.com/USS-Shenzhou/MadParticle (my online alias is USS_Shenzhou).

Demonstration video

This short video showcases several highlights of MadParticle. A few earlier videos are listed below:

- Initial announcement video on YouTube

- 0.5 version update on YouTube

- 0.5 performance comparison on Bilibili (not translated yet)

- 0.8 version update on Bilibili (not translated yet)

- Third anniversary/1.0 version update on Bilibili (not translated yet)

A list of my other projects is available on the Home page.

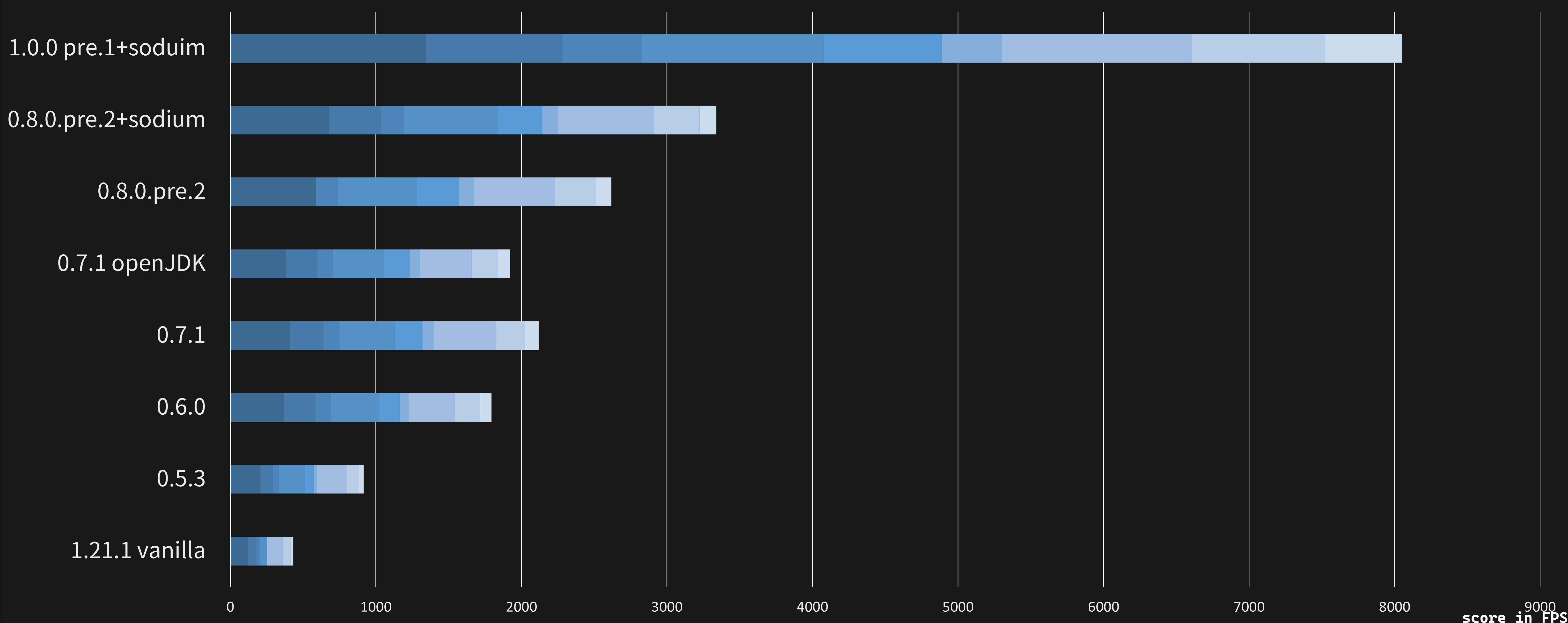

Performance comparison

In response to feedback from players and other developers, I began optimizing the vanilla particle system. The current version of MadParticle achieves a 10–20x performance improvement over the vanilla Minecraft implementation.

This image shows the performance of MadParticle (including historical versions) across nine predefined test scenarios, covering multiple dynamic scenes with 50k to 200k particles rendered simultaneously. The performance score is the sum of the average FPS across all scenarios. The bottom row shows the performance of vanilla Minecraft.

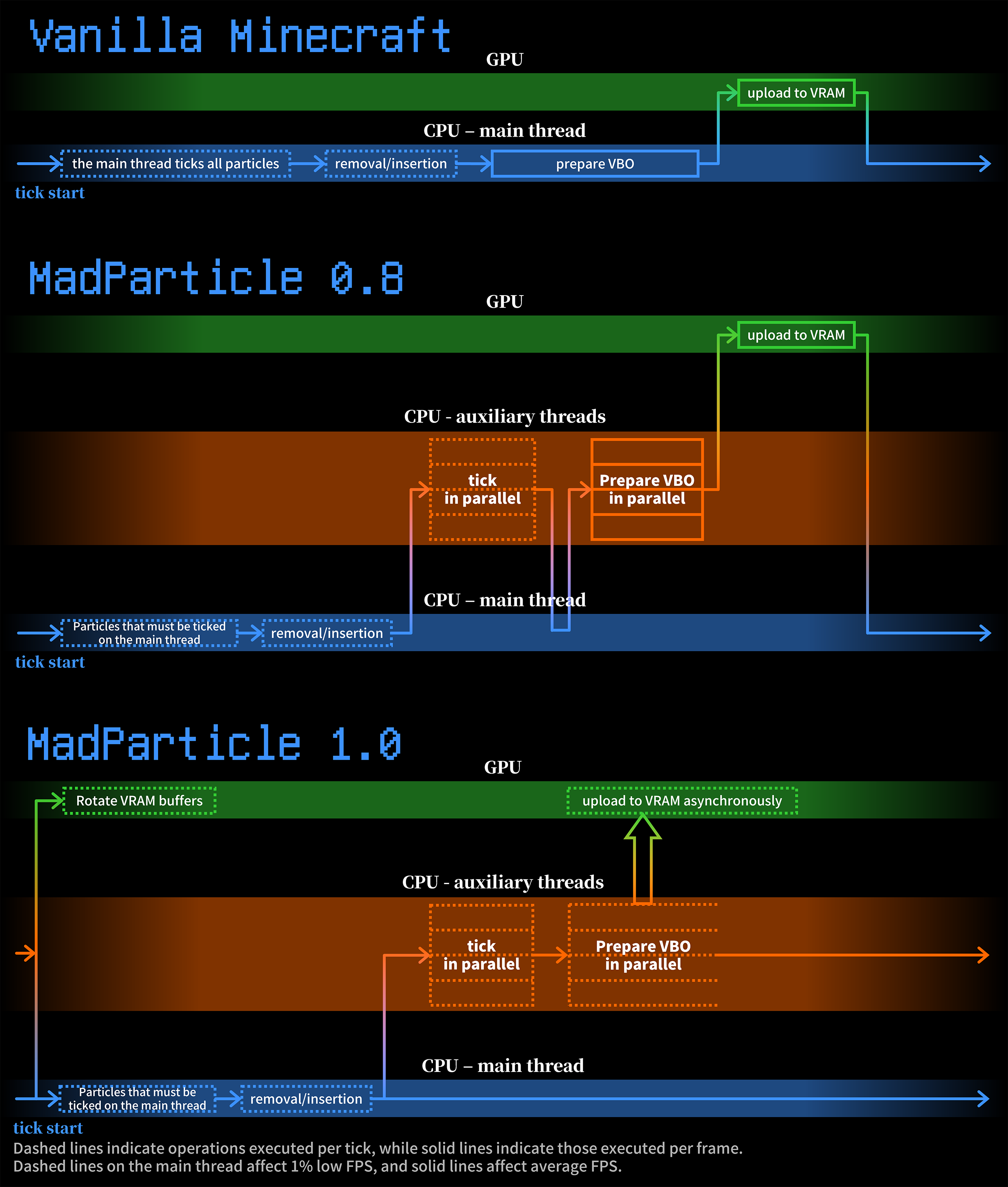
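To make the scoring rule concrete (a sum of per-scenario averages, not an average of sums), here is a minimal sketch. It assumes each scenario yields a series of FPS samples; PerformanceScoreSketch, averageFps, score, and the sample values are hypothetical and not part of MadParticle's benchmark tooling.

```java
import java.util.List;

/** Hypothetical sketch: score = sum over scenarios of each scenario's average FPS. */
class PerformanceScoreSketch {
    /** Arithmetic mean of one scenario's FPS samples. */
    static double averageFps(double[] samples) {
        double sum = 0;
        for (double s : samples) {
            sum += s;
        }
        return sum / samples.length;
    }

    /** Adds up the per-scenario averages; a higher score means better performance. */
    static double score(List<double[]> scenarios) {
        double total = 0;
        for (double[] samples : scenarios) {
            total += averageFps(samples);
        }
        return total;
    }

    public static void main(String[] args) {
        // Two made-up scenarios whose averages are 50 and 30.
        System.out.println(score(List.of(new double[]{60, 40}, new double[]{30, 30})));
    }
}
```

Because the score adds absolute averages, a light scenario running at high FPS moves the score more than the same relative gain in a heavy, low-FPS scenario.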

Architecture evolution

MadParticle has been under active development for three years; the architecture described below is that of the latest version.

This image illustrates a major recent overhaul of MadParticle's architecture compared with the vanilla system: a shift from a mostly main-thread design to an almost fully multithreaded one.

NeoInstancedRenderManager

NeoInstancedRenderManager is a highly optimized particle rendering system primarily based on instanced rendering, with some specializations tailored to the Minecraft rendering pipeline.

Although instanced rendering significantly reduces overall rendering time, filling the VBO still consumes substantial CPU time. To address this, the VBO is filled in parallel: each thread is preassigned a starting offset and a roughly equal share of the particles (see MultiThreadedEqualObjectLinkedOpenHashSetQueue below).
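The parallel fill described above can be sketched with JDK types alone. This is a minimal sketch, not MadParticle's code: a direct ByteBuffer stands in for the mapped VBO, each instance is assumed to be just {x, y, z, roll} as floats, and ParallelFillSketch, STRIDE, and fill are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical sketch: several threads fill one buffer, each writing a disjoint, precomputed range. */
class ParallelFillSketch {
    static final int STRIDE = 4 * Float.BYTES; // bytes per instance in this sketch: x, y, z, roll

    /** Each inner list is one thread's pre-partitioned share of instances. */
    static ByteBuffer fill(List<List<float[]>> partitions, ExecutorService pool) {
        int total = partitions.stream().mapToInt(List::size).sum();
        ByteBuffer vbo = ByteBuffer.allocateDirect(total * STRIDE).order(ByteOrder.nativeOrder());
        CompletableFuture<?>[] tasks = new CompletableFuture<?>[partitions.size()];
        int startIndex = 0;
        for (int t = 0; t < partitions.size(); t++) {
            List<float[]> part = partitions.get(t);
            int first = startIndex; // preassigned starting instance for this thread
            // Give each task its own view; duplicate() does not keep the byte order, so set it again.
            ByteBuffer view = vbo.duplicate().order(ByteOrder.nativeOrder());
            tasks[t] = CompletableFuture.runAsync(() -> {
                for (int i = 0; i < part.size(); i++) {
                    int offset = (first + i) * STRIDE;
                    float[] instance = part.get(i);
                    for (int k = 0; k < 4; k++) {
                        view.putFloat(offset + k * Float.BYTES, instance[k]); // absolute put, no shared position
                    }
                }
            }, pool);
            startIndex += part.size();
        }
        CompletableFuture.allOf(tasks).join(); // writes from all tasks are visible after join
        return vbo;
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        ByteBuffer vbo = fill(List.of(
                List.of(new float[]{1, 2, 3, 4}),
                List.of(new float[]{5, 6, 7, 8}, new float[]{9, 10, 11, 12})), pool);
        pool.shutdown();
        System.out.println(vbo.getFloat(2 * STRIDE)); // first float of the third instance
    }
}
```

Since every thread writes a disjoint byte range, no locking is needed; the single join at the end is the only synchronization point.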

Additionally, a ring of persistently mapped OpenGL buffers (PersistentMappedArrayBuffer) is used to eliminate blocking during data uploads: worker threads fill the next buffer while the current one is being rendered. Together, these designs let the class make full use of the available CPU cores (hyper-threading aside) and achieve performance far exceeding that of vanilla Minecraft.
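The current/next rotation can be illustrated with a minimal sketch. Direct ByteBuffers stand in for persistently mapped OpenGL buffers, and BufferRingSketch, current, next, and advance are hypothetical names. A real persistently mapped buffer must also wait on a GPU fence (for example glFenceSync/glClientWaitSync) before reusing a slot the GPU may still be reading; this sketch omits that.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch: render from current() while workers fill next(), then advance(). */
class BufferRingSketch {
    private final ByteBuffer[] slots;
    private int current = 0;

    BufferRingSketch(int slotCount, int bytesPerSlot) {
        if (slotCount < 2) {
            throw new IllegalArgumentException("a ring needs at least two slots");
        }
        slots = new ByteBuffer[slotCount];
        for (int i = 0; i < slotCount; i++) {
            slots[i] = ByteBuffer.allocateDirect(bytesPerSlot);
        }
    }

    /** The slot the renderer reads this frame. */
    ByteBuffer current() {
        return slots[current];
    }

    /** The slot worker threads write for the next frame. */
    ByteBuffer next() {
        return slots[(current + 1) % slots.length];
    }

    /** Call only after all writes into next() have completed, e.g. after joining the fill task. */
    void advance() {
        current = (current + 1) % slots.length;
    }

    public static void main(String[] args) {
        var ring = new BufferRingSketch(3, 64);
        ring.next().putFloat(0, 1.5f); // a worker fills the next slot
        ring.advance();                // the filled slot becomes current
        System.out.println(ring.current().getFloat(0));
    }
}
```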

NeoInstancedRenderManager.java

```java
// The import statements at the beginning of classes have been omitted.

/**
 * @author USS_Shenzhou
 */
public class NeoInstancedRenderManager {
    private final ResourceLocation usingAtlas;

    public NeoInstancedRenderManager(ResourceLocation usingAtlas) {
        this.usingAtlas = usingAtlas;
    }

    //----------meta manager----------

    /**
     * 0 is for {@link net.minecraft.client.renderer.texture.TextureAtlas#LOCATION_PARTICLES},
     * <br>
     * 1 is for {@link net.minecraft.client.renderer.texture.TextureAtlas#LOCATION_BLOCKS}.
     */
    @SuppressWarnings("deprecation")
    private static final NeoInstancedRenderManager[] MANAGER_BY_RENDER_TYPE = new NeoInstancedRenderManager[]{
            new NeoInstancedRenderManager(TextureAtlas.LOCATION_PARTICLES),
            new NeoInstancedRenderManager(TextureAtlas.LOCATION_BLOCKS),
    };

    public static NeoInstancedRenderManager getInstance(ParticleRenderType renderType) {
        if (renderType == ParticleRenderType.TERRAIN_SHEET || renderType == ModParticleRenderTypes.INSTANCED_TERRAIN) {
            return MANAGER_BY_RENDER_TYPE[1];
        }
        return MANAGER_BY_RENDER_TYPE[0];
    }

    public static Stream<NeoInstancedRenderManager> getAllInstances() {
        return Stream.of(MANAGER_BY_RENDER_TYPE);
    }

    public static void forEach(Consumer<? super NeoInstancedRenderManager> consumer) {
        consumer.accept(MANAGER_BY_RENDER_TYPE[0]);
        consumer.accept(MANAGER_BY_RENDER_TYPE[1]);
    }

    public static void init() {
        LogUtils.getLogger().info("NeoInstancedRenderManager inited.");
    }

    //----------render----------

    /**
     * <pre>{@code
     * //-----per tick update-----
     * //single float
     * layout (location=0) in vec4 instanceXYZRoll;
     * //single float
     * layout (location=1) in vec4 prevInstanceXYZRoll;
     * //half float
     * layout (location=2) in vec4 instanceUV;
     * //half float
     * layout (location=3) in vec4 instanceColor;
     * //half float
     * layout (location=4) in vec2 sizeExtraLight;
     * //(4+4 bits) 1 byte + 3 byte padding
     * layout (location=5) in uint instanceUV2;
     * }</pre>
     */
    private static final int TICK_VBO_SIZE = 8 * 4 + 4 * 2 + 4 * 2 + 2 * 2 + 4; // 56 bytes per instance
    private static final LightCache LIGHT_CACHE = new LightCache();
    private static boolean forceMaxLight = false;
    private static final GpuBuffer PROXY_VAO = ModRenderPipelines.INSTANCED_COMMON_DEPTH.getVertexFormat().uploadImmediateVertexBuffer(ByteBuffer.allocateDirect(128));
    private static final GpuBuffer EBO;
    private static final short DEFAULT_EXTRA_LIGHT = Float.floatToFloat16(1f);
    private final PersistentMappedArrayBuffer tickVBO = new PersistentMappedArrayBuffer();
    private int amount, nextAmount;
    private volatile CompletableFuture<Void> updateTickVBOTask = null;
    private final MappableRingBuffer cameraCorrectionUbo = new MappableRingBuffer(() -> "MadParticle CameraCorrection Uniform",
            GpuBuffer.USAGE_UNIFORM | GpuBuffer.USAGE_MAP_WRITE,
            (4 + 4) * 4);

    static {
        NeoForge.EVENT_BUS.addListener(NeoInstancedRenderManager::checkForceMaxLight);
        // Indices of one quad drawn as two triangles: (0, 1, 2) and (2, 1, 3).
        var eboBuffer = BufferUtils.createByteBuffer(6 * 4);
        eboBuffer.putInt(0);
        eboBuffer.putInt(1);
        eboBuffer.putInt(2);
        eboBuffer.putInt(2);
        eboBuffer.putInt(1);
        eboBuffer.putInt(3);
        eboBuffer.flip();
        EBO = ModRenderPipelines.INSTANCED_COMMON_DEPTH.getVertexFormat().uploadImmediateIndexBuffer(eboBuffer);
    }

    @SubscribeEvent
    public static void checkForceMaxLight(ClientTickEvent.Pre event) {
        forceMaxLight = ConfigHelper.getConfigRead(MadParticleConfig.class).forceMaxLight;
    }

    public void render() {
        if (amount == 0) {
            return;
        }
        var mc = Minecraft.getInstance();
        var encoder = RenderSystem.getDevice().createCommandEncoder();
        var cameraUbo = encoder.mapBuffer(cameraCorrectionUbo.currentBuffer(), false, true);
        var dynamicUbo = RenderSystem.getDynamicUniforms().writeTransform(RenderSystem.getModelViewMatrix(), new Vector4f(1.0F, 1.0F, 1.0F, 1.0F), new Vector3f(), new Matrix4f(), 0.0F);
        //noinspection DataFlowIssue
        var pass = encoder.createRenderPass(
                () -> "MadParticle render",
                //TODO support OIT/renderPass twice
                mc.getMainRenderTarget().getColorTextureView(),
                OptionalInt.empty(),
                mc.getMainRenderTarget().getDepthTextureView(),
                OptionalDouble.empty());
        try (pass; cameraUbo) {
            pass.setPipeline(getRenderPipeline());
            setUniform(pass, mc, cameraUbo, dynamicUbo);
            setVAO(pass);
            tickVBO.getCurrent().bind();
            pass.setIndexBuffer(EBO, VertexFormat.IndexType.INT);
            bindIrisFBO();
            // Draw the quad once per particle instance.
            pass.drawIndexed(0, 0, 6, amount);
        }
        cleanUp();
    }

    public void preUpdate() {
        // Wait for the previous asynchronous VBO fill, then advance the buffer ring.
        if (updateTickVBOTask != null) {
            updateTickVBOTask.join();
            tickVBO.next();
        }
    }

    public void update(MultiThreadedEqualObjectLinkedOpenHashSetQueue<Particle> particles) {
        // Render the particle count of the previous update, whose asynchronous fill has now completed.
        amount = nextAmount;
        nextAmount = particles.size();
        tickVBO.ensureCapacity(TICK_VBO_SIZE * particles.size());
    }

    public void postUpdate(MultiThreadedEqualObjectLinkedOpenHashSetQueue<Particle> particles) {
        updateTickVBOTask = executeUpdate(particles, this::updateTickVBOInternal, tickVBO);
    }

    private RenderPipeline getRenderPipeline() {
        return switch (ConfigHelper.getConfigRead(MadParticleConfig.class).translucentMethod) {
            case DEPTH_TRUE -> ModRenderPipelines.INSTANCED_COMMON_DEPTH;
            case DEPTH_FALSE -> ModRenderPipelines.INSTANCED_COMMON_BLEND;
            default -> ModRenderPipelines.INSTANCED_COMMON_DEPTH;
        };
    }

    private void setVAO(RenderPass pass) {
        pass.setVertexBuffer(0, PROXY_VAO);
        getDevice().vertexArrayCache().bindVertexArray(getRenderPipeline().getVertexFormat(), (GlBuffer) PROXY_VAO);
        setVertexAttributeArray();
    }

    private void setUniform(RenderPass pass, Minecraft mc, GpuBuffer.MappedView ubo, GpuBufferSlice dynamicUbo) {
        RenderSystem.bindDefaultUniforms(pass);
        pass.setUniform("DynamicTransforms", dynamicUbo);
        var camera = mc.gameRenderer.getMainCamera();
        var cameraPos = camera.getPosition();
        var cameraRot = camera.rotation();
        // CameraCorrection (std140): vec4 camera rotation quaternion, vec4 (camera position, partial tick).
        Std140Builder.intoBuffer(ubo.data())
                .putVec4(cameraRot.x, cameraRot.y, cameraRot.z, cameraRot.w)
                .putVec4((float) cameraPos.x, (float) cameraPos.y, (float) cameraPos.z, mc.getDeltaTracker().getGameTimeDeltaPartialTick(false));
        pass.setUniform("CameraCorrection", cameraCorrectionUbo.currentBuffer());
        pass.bindSampler("Sampler0", mc.getTextureManager().getTexture(usingAtlas).getTextureView());
        pass.bindSampler("Sampler2", mc.gameRenderer.lightTexture().getTextureView());
    }

    private void updateTickVBOInternal(ObjectLinkedOpenHashSet<TextureSheetParticle> particles, int index, long tickVBOAddress, @Deprecated float partialTicks) {
        var simpleBlockPosSingle = new SimpleBlockPos(0, 0, 0);
        for (TextureSheetParticle particle : particles) {
            // Each instance occupies TICK_VBO_SIZE bytes; this thread starts at its preassigned index.
            long start = tickVBOAddress + (long) index * TICK_VBO_SIZE;
            //xyz roll
            MemoryUtil.memPutFloat(start, (float) particle.x);
            MemoryUtil.memPutFloat(start + 4, (float) particle.y);
            MemoryUtil.memPutFloat(start + 8, (float) particle.z);
            MemoryUtil.memPutFloat(start + 12, particle.roll);
            //prev xyz roll
            MemoryUtil.memPutFloat(start + 16, (float) particle.xo);
            MemoryUtil.memPutFloat(start + 20, (float) particle.yo);
            MemoryUtil.memPutFloat(start + 24, (float) particle.zo);
            MemoryUtil.memPutFloat(start + 28, particle.oRoll);
            //uv
            MemoryUtil.memPutShort(start + 32, Float.floatToFloat16(particle.getU0()));
            MemoryUtil.memPutShort(start + 34, Float.floatToFloat16(particle.getU1()));
            MemoryUtil.memPutShort(start + 36, Float.floatToFloat16(particle.getV0()));
            MemoryUtil.memPutShort(start + 38, Float.floatToFloat16(particle.getV1()));
            //color
            MemoryUtil.memPutShort(start + 40, Float.floatToFloat16(particle.rCol));
            MemoryUtil.memPutShort(start + 42, Float.floatToFloat16(particle.gCol));
            MemoryUtil.memPutShort(start + 44, Float.floatToFloat16(particle.bCol));
            MemoryUtil.memPutShort(start + 46, Float.floatToFloat16(particle.alpha));
            //size extraLight
            MemoryUtil.memPutShort(start + 48, Float.floatToFloat16(particle.getQuadSize(0.5f)));
            if (particle instanceof MadParticle madParticle) {
                MemoryUtil.memPutShort(start + 50, Float.floatToFloat16(madParticle.getBloomFactor()));
            } else {
                MemoryUtil.memPutShort(start + 50, DEFAULT_EXTRA_LIGHT);
            }
            //uv2
            if (forceMaxLight) {
                MemoryUtil.memPutByte(start + 52, (byte) 0xff);
            } else {
                // Sample light at the midpoint between the previous and current positions.
                float x = (float) (particle.xo + 0.5f * (particle.x - particle.xo));
                float y = (float) (particle.yo + 0.5f * (particle.y - particle.yo));
                float z = (float) (particle.zo + 0.5f * (particle.z - particle.zo));
                simpleBlockPosSingle.set(Mth.floor(x), Mth.floor(y), Mth.floor(z));
                byte l;
                if (particle instanceof MadParticle madParticle) {
                    l = LIGHT_CACHE.getOrCompute(simpleBlockPosSingle.x, simpleBlockPosSingle.y, simpleBlockPosSingle.z, particle, simpleBlockPosSingle);
                    l = madParticle.checkEmit(l);
                } else if (TakeOver.RENDER_CUSTOM_LIGHT.contains(particle.getClass())) {
                    l = LightCache.compressPackedLight(particle.getLightColor(partialTicks));
                } else {
                    l = LIGHT_CACHE.getOrCompute(simpleBlockPosSingle.x, simpleBlockPosSingle.y, simpleBlockPosSingle.z, particle, simpleBlockPosSingle);
                }
                MemoryUtil.memPutByte(start + 52, l);
            }
            index++;
        }
    }

    @SuppressWarnings("unchecked")
    private CompletableFuture<Void> executeUpdate(MultiThreadedEqualObjectLinkedOpenHashSetQueue<Particle> particles, VboUpdater updater, PersistentMappedArrayBuffer vbo) {
        var partialTicks = Minecraft.getInstance().getDeltaTracker().getGameTimeDeltaPartialTick(false);
        CompletableFuture<Void>[] futures = new CompletableFuture[getThreads()];
        int index = 0;
        for (int group = 0; group < futures.length; group++) {
            @SuppressWarnings("rawtypes")
            ObjectLinkedOpenHashSet set = particles.get(group);
            // Each group writes a disjoint range that starts right after the previous group's particles.
            int i = index;
            futures[group] = CompletableFuture.runAsync(
                    () -> updater.update((ObjectLinkedOpenHashSet<TextureSheetParticle>) set, i, vbo.getNext().getAddress(), partialTicks),
                    getFixedThreadPool()
            );
            index += set.size();
        }
        return CompletableFuture.allOf(futures);
    }

    private void setVertexAttributeArray() {
        // Per-instance attributes (divisor 1) matching the 56-byte layout documented above.
        tickVBO.getCurrent().bind();
        glEnableVertexAttribArray(0);
        glVertexAttribPointer(0, 4, GL_FLOAT, false, TICK_VBO_SIZE, 0);
        glVertexAttribDivisorARB(0, 1);
        glEnableVertexAttribArray(1);
        glVertexAttribPointer(1, 4, GL_FLOAT, false, TICK_VBO_SIZE, 16);
        glVertexAttribDivisorARB(1, 1);
        glEnableVertexAttribArray(2);
        glVertexAttribPointer(2, 4, GL_HALF_FLOAT, false, TICK_VBO_SIZE, 32);
        glVertexAttribDivisorARB(2, 1);
        glEnableVertexAttribArray(3);
        glVertexAttribPointer(3, 4, GL_HALF_FLOAT, false, TICK_VBO_SIZE, 40);
        glVertexAttribDivisorARB(3, 1);
        glEnableVertexAttribArray(4);
        glVertexAttribPointer(4, 2, GL_HALF_FLOAT, false, TICK_VBO_SIZE, 48);
        glVertexAttribDivisorARB(4, 1);
        glEnableVertexAttribArray(5);
        glVertexAttribIPointer(5, 1, GL_UNSIGNED_BYTE, TICK_VBO_SIZE, 52);
        glVertexAttribDivisorARB(5, 1);
    }

    private void cleanUp() {
        cameraCorrectionUbo.rotate();
        GlStateManager._glBindVertexArray(0);
        glBindBuffer(GL_ARRAY_BUFFER, 0);
        glDepthMask(true);
        glBindFramebuffer(GL_FRAMEBUFFER, 0);
    }

    public int getAmount() {
        return amount;
    }

    @FunctionalInterface
    public interface VboUpdater {
        void update(ObjectLinkedOpenHashSet<TextureSheetParticle> particles, int startIndex, long frameVBOAddress, float partialTicks);
    }

    private static GlDevice getDevice() {
        var device = RenderSystem.getDevice();
        if (device instanceof ValidationGpuDevice validationGpuDevice) {
            return (GlDevice) validationGpuDevice.getRealDevice();
        } else if (device instanceof GlDevice glDevice) {
            return glDevice;
        }
        throw new IllegalStateException("Unsupported device type: " + device.getClass().getSimpleName());
    }

    //----------iris----------

    private void bindIrisFBO() {
        if (!cn.ussshenzhou.madparticle.MadParticle.irisOn) {
            return;
        }
        var program = getDevice().getOrCompilePipeline(getRenderType().renderPipeline).program();
        try {
            // Iris internals are not public API, so its framebuffer is bound via reflection.
            var writingToAfterTranslucentField = program.getClass().getDeclaredField("writingToAfterTranslucent");
            writingToAfterTranslucentField.setAccessible(true);
            var writingToAfterTranslucent = writingToAfterTranslucentField.get(program);
            var bindMethod = writingToAfterTranslucent.getClass().getDeclaredMethod("bind");
            bindMethod.setAccessible(true);
            bindMethod.invoke(writingToAfterTranslucent);
            glDrawBuffer(GL_COLOR_ATTACHMENT0);
        } catch (NoSuchFieldException | IllegalAccessException | NoSuchMethodException | InvocationTargetException e) {
            LogUtils.getLogger().error(e.toString());
        }
    }

    private RenderType.CompositeRenderType getRenderType() {
        return (RenderType.CompositeRenderType) (usingAtlas == TextureAtlas.LOCATION_BLOCKS ? ParticleRenderType.TERRAIN_SHEET.renderType() : ParticleRenderType.PARTICLE_SHEET_TRANSLUCENT.renderType());
    }
}
```

MultiThreadedEqualObjectLinkedOpenHashSetQueue

MultiThreadedEqualObjectLinkedOpenHashSetQueue is a multithreaded container, essentially an array of ObjectLinkedOpenHashSet instances, one per buffer-filler thread.

Specifically:

- The Minecraft source code expects the particle container to be a Queue (vanilla uses Guava's EvictingQueue). ObjectLinkedOpenHashSet from the fastutil library provides several critical features:
  - When the number of particles exceeds the predefined limit, the oldest particles can be removed in insertion order.
  - It enables fast lookup, removal, and insertion of particle instances, maximizing throughput in complex scenes.

- The traditional ForkJoinPool mechanism incurs significant overhead when handling a large number of small tasks. Assigning each element to one of the array's sets at insertion time pre-partitions the work and avoids this overhead. Since the array size is typically close to the number of processor cores, the slightly higher lookup/removal/insertion cost compared with a single set is acceptable.

Building on these features, the container class described below was created to meet MadParticle’s high-performance processing needs.
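The partition-on-insert idea can be sketched with JDK types alone. This is a minimal sketch, not the MadParticle class: JDK LinkedHashSet stands in for fastutil's ObjectLinkedOpenHashSet, and PartitionedSetSketch, partitionSize, and forEachParallel are hypothetical names. Like the container below, add() picks the smallest set; this sketch additionally checks every partition for duplicates to keep set semantics.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

/** Hypothetical sketch: N insertion-ordered sets, filled evenly, processed as N tasks. */
class PartitionedSetSketch<E> {
    private final List<LinkedHashSet<E>> parts = new ArrayList<>();

    PartitionedSetSketch(int partitions) {
        for (int i = 0; i < partitions; i++) {
            parts.add(new LinkedHashSet<>());
        }
    }

    /** Rejects duplicates, then inserts into the currently smallest partition. */
    boolean add(E e) {
        LinkedHashSet<E> target = parts.get(0);
        for (LinkedHashSet<E> part : parts) {
            if (part.contains(e)) {
                return false;
            }
            if (part.size() < target.size()) {
                target = part;
            }
        }
        return target.add(e);
    }

    int size() {
        return parts.stream().mapToInt(LinkedHashSet::size).sum();
    }

    int partitionSize(int i) {
        return parts.get(i).size();
    }

    /** Applies the action to every element using one task per partition, not one per element. */
    void forEachParallel(Consumer<? super E> action, ExecutorService pool) {
        CompletableFuture<?>[] tasks = new CompletableFuture<?>[parts.size()];
        for (int i = 0; i < tasks.length; i++) {
            LinkedHashSet<E> part = parts.get(i);
            tasks[i] = CompletableFuture.runAsync(() -> part.forEach(action), pool);
        }
        CompletableFuture.allOf(tasks).join();
    }

    public static void main(String[] args) {
        var set = new PartitionedSetSketch<Integer>(4);
        for (int i = 0; i < 10; i++) {
            set.add(i);
        }
        ExecutorService pool = Executors.newFixedThreadPool(4);
        var sum = new AtomicInteger();
        set.forEachParallel(sum::addAndGet, pool);
        pool.shutdown();
        System.out.println(set.size() + " elements, sum " + sum.get());
    }
}
```

The task count stays equal to the partition count regardless of how many elements are stored, which is what keeps scheduling overhead flat.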

MultiThreadedEqualObjectLinkedOpenHashSetQueue.java

```java
// The import statements at the beginning of classes have been omitted.

/**
 * @author USS_Shenzhou
 * @see com.google.common.collect.EvictingQueue
 * @see it.unimi.dsi.fastutil.objects.ObjectLinkedOpenHashSet
 */
public class MultiThreadedEqualObjectLinkedOpenHashSetQueue<E> implements Queue<E> {
    private static final AtomicInteger ID = new AtomicInteger();
    private static final AtomicInteger THREAD_ID = new AtomicInteger();
    private final ObjectLinkedOpenHashSet<E>[] sets;
    private final int maxSize;
    private final ForkJoinPool threadPool;

    public MultiThreadedEqualObjectLinkedOpenHashSetQueue(int initialCapacityOfEachThread, int maxSize) {
        this.maxSize = maxSize;
        // Capture this queue's ID now: the thread factory below runs lazily, after other queues may have incremented ID.
        int id = ID.incrementAndGet();
        int threads = ConfigHelper.getConfigRead(MadParticleConfig.class).getBufferFillerThreads();
        // One insertion-ordered set per buffer-filler thread, presized to the requested capacity.
        //noinspection unchecked,rawtypes
        sets = Stream.generate(() -> new ObjectLinkedOpenHashSet(initialCapacityOfEachThread))
                .limit(threads)
                .toArray(ObjectLinkedOpenHashSet[]::new);
        threadPool = new ForkJoinPool(threads, pool -> {
            ForkJoinWorkerThread thread = ForkJoinPool.defaultForkJoinWorkerThreadFactory.newThread(pool);
            thread.setName("MadParticle-MultiThreadedEqualObjectLinkedOpenHashSetQueue-" + id + "-Thread-" + THREAD_ID.getAndIncrement());
            return thread;
        }, null, false);
    }

    public MultiThreadedEqualObjectLinkedOpenHashSetQueue(int maxSize) {
        this(128, maxSize);
    }

    public int remainingCapacity() {
        return maxSize - this.size();
    }

    public ObjectLinkedOpenHashSet<E> get(int i) {
        return sets[i];
    }

    @Override
    public int size() {
        int size = 0;
        for (var hashset : sets) {
            size += hashset.size();
        }
        return size;
    }

    @Override
    public boolean isEmpty() {
        return size() == 0;
    }

    @Override
    public boolean contains(Object o) {
        if (o == null) {
            return false;
        }
        for (var hashset : sets) {
            if (hashset.contains(o)) {
                return true;
            }
        }
        return false;
    }

    @DoNotCall
    @Override
    public @NotNull Iterator<E> iterator() {
        return Iterators.concat(Arrays.stream(sets)
                .map(Set::iterator)
                .iterator());
    }

    @SuppressWarnings("NullableProblems")
    @Override
    public @NotNull Object[] toArray() {
        return Arrays.stream(sets).flatMap(Set::stream).toArray();
    }

    @SuppressWarnings("NullableProblems")
    @Override
    public @NotNull <T> T[] toArray(@NotNull T[] a) {
        if (a.length < this.size()) {
            @SuppressWarnings("unchecked")
            T[] newArray = (T[]) Array.newInstance(a.getClass().getComponentType(), this.size());
            a = newArray;
        }
        int index = 0;
        for (var hashset : sets) {
            for (E e : hashset) {
                //noinspection unchecked
                a[index] = (T) e;
                index++;
            }
        }
        if (index < a.length) {
            // Collection#toArray(T[]) contract: the slot after the last element is set to null.
            a[index] = null;
        }
        return a;
    }

    @Override
    public boolean add(E e) {
        if (this.size() >= maxSize) {
            return false;
        }
        // Insert into the smallest set so that every thread gets a roughly equal share.
        var target = sets[0];
        for (var hashset : sets) {
            if (hashset.size() < target.size()) {
                target = hashset;
            }
        }
        return target.add(e);
    }

    @Override
    public boolean remove(Object o) {
        for (var hashset : sets) {
            if (hashset.remove(o)) {
                return true;
            }
        }
        return false;
    }

    @Override
    public boolean containsAll(@NotNull Collection<?> c) {
        for (var o : c) {
            if (!this.contains(o)) {
                return false;
            }
        }
        return true;
    }

    @Override
    public boolean addAll(@NotNull Collection<? extends E> c) {
        // Distribute round-robin across the sets, stopping once maxSize is reached.
        int index = 0;
        int max = maxSize - this.size();
        boolean changed = false;
        for (var o : c) {
            if (index >= max) {
                return changed;
            }
            changed |= sets[index % sets.length].add(o);
            index++;
        }
        return changed;
    }

    @Override
    public boolean removeAll(@NotNull Collection<?> c) {
        @SuppressWarnings("unchecked")
        CompletableFuture<Boolean>[] futures = new CompletableFuture[sets.length];
        for (int i = 0; i < sets.length; i++) {
            int finalI = i;
            futures[i] = CompletableFuture.supplyAsync(
                    () -> sets[finalI].removeAll(c),
                    threadPool
            );
        }
        CompletableFuture.allOf(futures).join();
        // Collection#removeAll must report whether any element was actually removed.
        boolean changed = false;
        for (var future : futures) {
            changed |= future.join();
        }
        return changed;
    }

    @Override
    public boolean retainAll(@NotNull Collection<?> c) {
        throw new UnsupportedOperationException();
    }

    @Override
    public void clear() {
        for (var hashset : sets) {
            hashset.clear();
        }
    }

    @Override
    public boolean offer(E e) {
        return add(e);
    }

    @Override
    public E remove() {
        var r = poll();
        if (r == null) {
            throw new NoSuchElementException();
        }
        return r;
    }

    @Override
    public E poll() {
        // Take the oldest element of the largest set; if the largest set is empty, all of them are.
        var target = sets[0];
        for (var hashset : sets) {
            if (hashset.size() > target.size()) {
                target = hashset;
            }
        }
        // Queue#poll must return null instead of throwing when the queue is empty.
        return target.isEmpty() ? null : target.removeFirst();
    }

    @Override
    public E element() {
        var r = peek();
        if (r == null) {
            throw new NoSuchElementException();
        }
        return r;
    }

    @Override
    public E peek() {
        var target = sets[0];
        for (var hashset : sets) {
            if (hashset.size() > target.size()) {
                target = hashset;
            }
        }
        // Queue#peek must return null instead of throwing when the queue is empty.
        return target.isEmpty() ? null : target.getFirst();
    }

    @Override
    public void forEach(Consumer<? super E> action) {
        CompletableFuture<?>[] futures = new CompletableFuture[sets.length];
        for (int i = 0; i < sets.length; i++) {
            int finalI = i;
            futures[i] = CompletableFuture.runAsync(
                    () -> sets[finalI].parallelStream().forEach(action),
                    threadPool
            );
        }
        CompletableFuture.allOf(futures).join();
    }
}
```

NotEnoughBandwidth

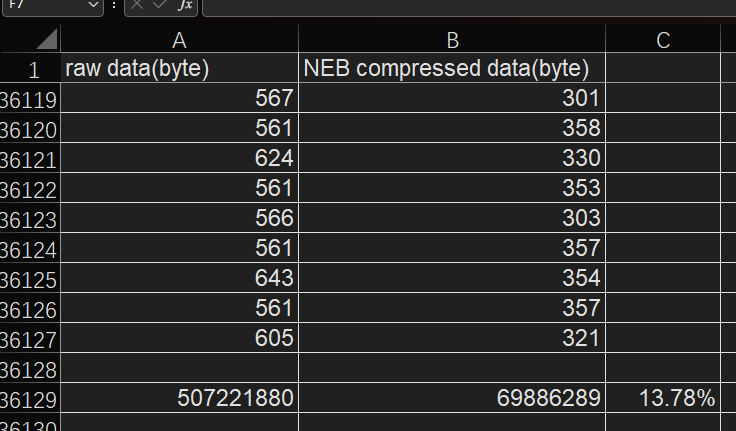

NotEnoughBandwidth (NEB) is a project focused on optimizing network traffic. In vanilla Minecraft, most packets carry fewer than 10 bytes of actual payload, while a large portion of the bandwidth is spent on TCP headers and application-layer headers. In regions where bandwidth is costly, the vanilla networking mechanism places significant financial pressure on Minecraft server owners.

NEB aggregates game packets over short intervals, removes redundant headers, replaces string-based packet identifiers with integer indexes, and compresses the result with ZSTD. Together, these techniques greatly reduce the network bandwidth used by Minecraft servers.
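The grouping and identifier replacement can be illustrated with a minimal sketch of an aggregated frame, mirroring the b/t/n/s/d fields documented in PacketAggregationPacket's Javadoc. It uses plain JDK streams instead of Netty's ByteBuf and omits compression. It assumes an unindexed identifier is written as a varint-length-prefixed UTF-8 string (as Minecraft's FriendlyByteBuf.writeResourceLocation does via writeUtf); AggregationFramingSketch, the type name "example:move", and the index 42 are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: subpackets grouped by type, known types written as a 3-byte index. */
class AggregationFramingSketch {

    /** Writes 7 bits per byte, least significant group first; the high bit means "more bytes follow". */
    static void writeVarInt(ByteArrayOutputStream out, int value) {
        while ((value & ~0x7F) != 0) {
            out.write((value & 0x7F) | 0x80);
            value >>>= 7;
        }
        out.write(value);
    }

    static byte[] encode(Map<String, List<byte[]>> grouped, Map<String, Integer> typeIndex) {
        var out = new ByteArrayOutputStream();
        grouped.forEach((type, payloads) -> {
            Integer index = typeIndex.get(type);
            if (index != null) {
                out.write(1);                     // b = true: indexed type
                out.write((index >>> 16) & 0xFF); // t = 3-byte big-endian index
                out.write((index >>> 8) & 0xFF);
                out.write(index & 0xFF);
            } else {
                out.write(0);                     // b = false: full identifier
                byte[] name = type.getBytes(StandardCharsets.UTF_8);
                writeVarInt(out, name.length);    // t = length-prefixed UTF-8 string
                out.write(name, 0, name.length);
            }
            writeVarInt(out, payloads.size());    // n = subpackets of this type
            for (byte[] payload : payloads) {
                writeVarInt(out, payload.length);      // s = subpacket size
                out.write(payload, 0, payload.length); // d = subpacket bytes
            }
        });
        return out.toByteArray();
    }

    public static void main(String[] args) {
        Map<String, List<byte[]>> grouped = new LinkedHashMap<>();
        grouped.put("example:move", List.of(new byte[5], new byte[5]));
        byte[] indexed = encode(grouped, Map.of("example:move", 42));
        byte[] named = encode(grouped, Map.of());
        System.out.println("indexed: " + indexed.length + " bytes, named: " + named.length + " bytes");
    }
}
```

With two 5-byte subpackets, the indexed frame is 17 bytes and the named one 27 bytes: the 3-byte index replaces a 13-byte length-prefixed identifier, and the identifier is written once per type rather than once per packet.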

On the test dataset, NEB reduces packet bandwidth to 4.40% of the uncompressed vanilla traffic; even compared with vanilla's built-in gzip compression, it uses only 11% of the bandwidth.

| method | C2S | S2C | ratio (to uncompressed) | ratio (to vanilla gzip) |
|---|---|---|---|---|
| uncompressed (bytes) | 1,202,270 | 526,080,229 | 100.00% | |
| vanilla gzip (bytes) | 1,187,810 | 209,674,132 | 39.99% | 100.00% |
| ZSTD + packing (bytes) | 938,366 | 44,497,817 | 8.62% | 21.55% |
| aggregation + packing + ZSTD (bytes) | 828,416 | 22,371,107 | 4.40% | 11.00% |
| network packet count | 36,880 | 2,027,018 | 100.00% | |
| network packet count (aggregated) | 28,484 | 38,949 | 3.27% | |

Ratios are computed over the combined C2S + S2C totals; for the packet-count rows, the ratio compares the aggregated packet count with the unaggregated one.
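As a consistency check, the snippet below recomputes three of the table's ratios directly from its C2S and S2C figures. CompressionRatioCheck and ratio are illustrative names, not part of NEB.

```java
/** Recomputes table ratios from the C2S and S2C figures alone. */
class CompressionRatioCheck {
    /** Combined C2S + S2C total of a method divided by the combined total of a baseline. */
    static double ratio(long c2s, long s2c, long baseC2s, long baseS2c) {
        return (double) (c2s + s2c) / (baseC2s + baseS2c);
    }

    public static void main(String[] args) {
        long rawC2s = 1_202_270L, rawS2c = 526_080_229L;
        long gzipC2s = 1_187_810L, gzipS2c = 209_674_132L;
        long nebC2s = 828_416L, nebS2c = 22_371_107L;
        System.out.printf("aggregation+packing+ZSTD vs uncompressed: %.2f%%%n",
                100 * ratio(nebC2s, nebS2c, rawC2s, rawS2c));
        System.out.printf("aggregation+packing+ZSTD vs vanilla gzip: %.2f%%%n",
                100 * ratio(nebC2s, nebS2c, gzipC2s, gzipS2c));
        System.out.printf("aggregated vs unaggregated packet count: %.2f%%%n",
                100 * ratio(28_484L, 38_949L, 36_880L, 2_027_018L));
    }
}
```

The results round to the 4.40%, 11.00%, and 3.27% shown in the table, which confirms that the ratios are taken over both directions combined.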

NEB has undergone multiple rounds of testing on real multiplayer servers. In production environments, the measured compression ratio typically fluctuates between 14% and 18%, fully achieving its intended design goals.

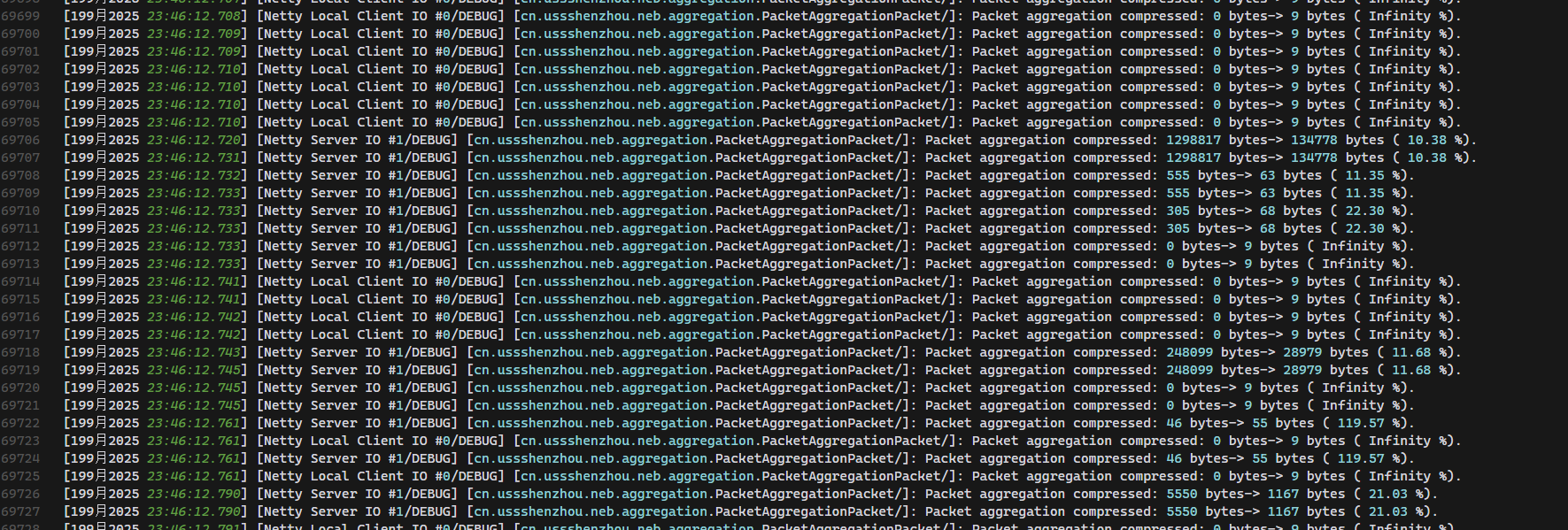

PacketAggregationPacket

PacketAggregationPacket.java is one of the core components of NEB. By introducing a new type of network packet and implementing most of the relevant logic within it, NEB can optimize network traffic transparently, with almost no impact on the behavior of other mods.

PacketAggregationPacket.java

```java
// The import statements at the beginning of classes have been omitted.

/**
 * @author USS_Shenzhou
 */
@MethodsReturnNonnullByDefault
public class PacketAggregationPacket implements CustomPacketPayload {
    public static final Type<PacketAggregationPacket> TYPE = new Type<>(ResourceLocation.fromNamespaceAndPath(ModConstants.MOD_ID, "packet_aggregation_packet"));

    @Override
    public Type<? extends CustomPacketPayload> type() {
        return TYPE;
    }

    private final Map<ResourceLocation, ArrayList<Packet<?>>> packets;
    private final FriendlyByteBuf buf;
    // Supplied by the encoding constructor; on the receiving side, set in handler() from the inbound protocol.
    private ProtocolInfo<?> protocolInfo;

    public PacketAggregationPacket(Map<ResourceLocation, ArrayList<Packet<?>>> packets, ProtocolInfo<?> protocolInfo) {
        this.packets = packets;
        this.buf = new FriendlyByteBuf(Unpooled.buffer());
        this.protocolInfo = protocolInfo;
    }

    /**
     * <pre>
     * ┌---┬---┬---┬---┬----┬----┬----┬----┬-...-┬---┬---┬---┬----┬----┬----┬----┐
     * │ S │ b │ t │ n │ s0 │ d0 │ s1 │ d1 │ ... │ b │ t │ n │ s0 │ d0 │ s1 │ d1 │
     * └---┴---┴---┴---┴----┴----┴----┴----┴-...-┴---┴---┴---┴----┴----┴----┴----┘
     *     └--------all packets of type A--------┘└-----all packets of type B----┘
     *     └------------------------------compressed-----------------------------┘
     *
     * S = varint, size of the uncompressed (raw) buf
     * b = bool, whether using indexed type
     * t = medium or ResLoc, type
     * n = varint, subpacket amount of this type
     * s = varint, size of this subpacket
     * d = bytes, data of this subpacket
     * </pre>
     */
    public void encode(FriendlyByteBuf buffer) {
        FriendlyByteBuf rawBuf = new FriendlyByteBuf(ByteBufAllocator.DEFAULT.buffer());
        packets.forEach((tag, packetsOfType) -> encodePackets(rawBuf, tag, packetsOfType));
        var compressedBuf = new FriendlyByteBuf(ByteBufHelper.compress(rawBuf));
        logCompressRatio(rawBuf, compressedBuf);
        // S
        buffer.writeVarInt(rawBuf.readableBytes());
        buffer.writeBytes(compressedBuf);
        rawBuf.release();
        compressedBuf.release();
    }

    private static void logCompressRatio(FriendlyByteBuf rawBuf, FriendlyByteBuf compressedBuf) {
        if (ConfigHelper.getConfigRead(NotEnoughBandwidthConfig.class).debugLog) {
            LogUtils.getLogger().debug("Packet aggregation compressed: {} bytes-> {} bytes ( {} %).",
                    rawBuf.readableBytes(),
                    compressedBuf.readableBytes(),
                    String.format("%.2f", 100f * compressedBuf.readableBytes() / rawBuf.readableBytes())
            );
        } else {
            LogUtils.getLogger().trace("Packet aggregation compressed: {} bytes-> {} bytes ( {} %).",
                    rawBuf.readableBytes(),
                    compressedBuf.readableBytes(),
                    String.format("%.2f", 100f * compressedBuf.readableBytes() / rawBuf.readableBytes())
            );
        }
    }

    private void encodePackets(FriendlyByteBuf raw, ResourceLocation type, Collection<Packet<?>> packets) {
        int nebIndex = NamespaceIndexManager.getNebIndexNotTight(type);
        // b, t
        if (nebIndex != 0) {
            raw.writeBoolean(true);
            raw.writeMedium(nebIndex);
        } else {
            raw.writeBoolean(false);
            raw.writeResourceLocation(type);
        }
        // n
        raw.writeVarInt(packets.size());
        for (var packet : packets) {
            encodePacket(raw, packet);
        }
    }

    @SuppressWarnings({"unchecked", "rawtypes"})
    private void encodePacket(FriendlyByteBuf raw, Packet<?> packet) {
        var b = Unpooled.buffer();
        protocolInfo.codec().encode(b, (Packet) packet);
        // s
        raw.writeVarInt(b.readableBytes());
        // d
        raw.writeBytes(b);
        b.release();
    }

    public PacketAggregationPacket(FriendlyByteBuf buffer) {
        this.protocolInfo = null;
        this.packets = new HashMap<>();
        // S
        int size = buffer.readVarInt();
        this.buf = new FriendlyByteBuf(ByteBufHelper.decompress(buffer.retainedDuplicate(), size));
        buffer.readerIndex(buffer.writerIndex());
    }

    public void handler(IPayloadContext context) {
        this.protocolInfo = context.connection().getInboundProtocol();
        while (this.buf.readableBytes() > 0) {
            decodePackets(this.buf, context.listener());
        }
        this.buf.release();
    }

    private void decodePackets(FriendlyByteBuf buf, ICommonPacketListener listener) {
        // b, t: the type is consumed here; the protocol codec decodes each subpacket from its own bytes.
        var type = buf.readBoolean()
                ? NamespaceIndexManager.getResourceLocation(buf.readUnsignedMedium() & 0x00ffffff, false)
                : buf.readResourceLocation();
        // n
        var amount = buf.readVarInt();
        for (var i = 0; i < amount; i++) {
            decodePacket(buf, listener);
        }
    }

    @SuppressWarnings("unchecked")
    private void decodePacket(FriendlyByteBuf buf, ICommonPacketListener listener) {
        // s
        var size = buf.readVarInt();
        // d
        var data = buf.readRetainedSlice(size);
        var packet = (Packet<ICommonPacketListener>) protocolInfo.codec().decode(data);
        packet.handle(listener);
        data.release();
    }
}
```